In WCF, collection data that is passed across the service boundary goes through a type filter: you will not necessarily get the service-side collection type on the client, even if you are expecting it.

Whether you return an int[] or a List<int>, you will get an int[] on the client by default.

The main reason is that service metadata has no representation for System.Collections.Generic.List<T> or System.Collections.Generic.LinkedList<T>. A System.Collections.Generic.List<int>, for example, does not have a different semantic meaning from an integer array (it is still a list of ints); it is simply easier to program against.
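
To make the point concrete, here is a minimal sketch that serializes an int[] and a List<int> with DataContractSerializer (the serializer WCF uses by default) and compares the resulting XML. The class and method names (WireShapeDemo, ToXml, FromXml) are made up for illustration; no service is involved, only the serializer.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;
using System.Text;

// Hypothetical helper, not part of WCF: it only wraps DataContractSerializer.
public static class WireShapeDemo
{
    // Serializes a value with the data contract serializer and returns the XML text.
    public static string ToXml<T>(T value)
    {
        var serializer = new DataContractSerializer(typeof(T));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, value);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }

    // Reads XML text back as the requested type.
    public static T FromXml<T>(string xml)
    {
        var serializer = new DataContractSerializer(typeof(T));
        using (var stream = new MemoryStream(Encoding.UTF8.GetBytes(xml)))
        {
            return (T)serializer.ReadObject(stream);
        }
    }

    public static void Main()
    {
        string fromArray = ToXml(new[] { 1, 2, 3 });
        string fromList = ToXml(new List<int> { 1, 2, 3 });

        // Print one payload, then whether the two payloads are identical.
        Console.WriteLine(fromArray);
        Console.WriteLine(fromArray == fromList);

        // A payload written from an array can be read back as a list.
        List<int> roundTripped = FromXml<List<int>>(fromArray);
        Console.WriteLine(roundTripped.Count);
    }
}
```

Both payloads should come out the same, which is why the proxy generator is free to pick either type on the client.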

Though, if one asks nicely, it is possible to guarantee the preferred collection type on the client proxy in certain scenarios.

Unidimensional collections, like List<T> or LinkedList<T>, are exposed as T arrays in the client proxy by default. Dictionary<K, V>, though, is regenerated on the client thanks to an annotation hint in the WSDL (in the XSD, to be precise). More on that later.

Let's look into it.

The WCF infrastructure bends over backwards to simplify client development. If the service side exposes a genuinely serializable collection (marked with [Serializable], not [DataContract]) that is also concrete (not an interface) and has an Add method with one of the following signatures...

public void Add(object obj);

public void Add(T item);

... then WCF will serialize the data as an array of the collection's element type.

Too complicated? Consider the following:

[ServiceContract]

interface ICollect

{

[OperationContract]

void AddCoin(Coin coin);

[OperationContract]

List<Coin> GetCoins();

}

Since List<T> has a void Add(T item) method and is marked with [Serializable], the client proxy will see the following contract:

[ServiceContract]

interface ICollect

{

[OperationContract]

void AddCoin(Coin coin);

[OperationContract]

Coin[] GetCoins();

}

Note: The Coin class should be marked with either [DataContract] or [Serializable] in this case.
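
The Add-method rule described above can be tried out without a service. The sketch below defines a hypothetical CoinPurse collection (concrete, [Serializable], with a parameterless constructor and a public Add) and runs it through DataContractSerializer next to a plain int[]. CoinPurse and PurseDemo are illustration-only names, and the element type is int to keep the example short.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;
using System.Text;

// Hypothetical custom collection: concrete, enumerable, default constructor, public Add.
[Serializable]
public class CoinPurse : IEnumerable<int>
{
    private readonly List<int> coins = new List<int>();

    public void Add(int item) { coins.Add(item); }

    public IEnumerator<int> GetEnumerator() { return coins.GetEnumerator(); }

    IEnumerator IEnumerable.GetEnumerator() { return GetEnumerator(); }
}

public static class PurseDemo
{
    // Serializes a value with the data contract serializer and returns the XML text.
    public static string ToXml<T>(T value)
    {
        var serializer = new DataContractSerializer(typeof(T));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, value);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }

    public static void Main()
    {
        var purse = new CoinPurse { 1, 5, 10 };

        // Print the custom collection's payload and whether it matches the array's.
        Console.WriteLine(ToXml(purse));
        Console.WriteLine(ToXml(purse) == ToXml(new[] { 1, 5, 10 }));
    }
}
```

If the rule holds as described, the custom collection and the array should produce the same XML, so nothing about CoinPurse itself survives the trip to the client.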

So what happens if one wants the same contract on the client proxy and the service? There is an option in the WCF proxy generator, svcutil.exe, to force generation of class definitions with a specific collection type.

Use the following for List<T>:

svcutil.exe http://service/metadata/address /collectionType:System.Collections.Generic.List`1

Note: List`1 uses a backquote (`), not a normal single quote character.

The /collectionType switch (short form: /ct) forces generation of strongly typed collection types. It will generate the holy grail on the client:

[ServiceContract]

interface ICollect

{

[OperationContract]

void AddCoin(Coin coin);

[OperationContract]

List<Coin> GetCoins();

}

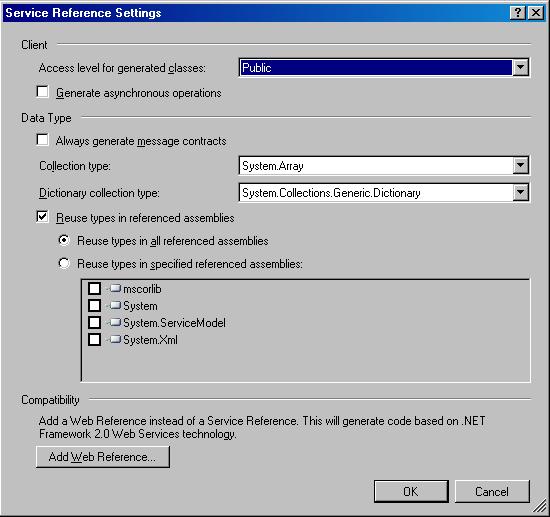

In Visual Studio 2008, you will even have an option to specify which types you want to use as collection types and dictionary collection types, as in the following picture:

On the other hand, dictionary collections, as in System.Collections.Generic.Dictionary<K, V> collections, will go through to the client no matter what you specify as a /ct parameter (or don't at all).

If you define the following on the service side...

[OperationContract]

Dictionary<string, int> GetFoo();

... this will get generated on the client:

[OperationContract]

Dictionary<string, int> GetFoo();

Why?

Because using System.Collections.Generic.Dictionary probably means you already accept that a client on an alternative platform may not be able to represent it. There is no way to meaningfully convey the semantics of a .NET dictionary class using WSDL/XSD alone.

So, how does the client know?

The values are serialized as a sequence of key/value pair elements, as the following schema shows:

<xs:complexType name="ArrayOfKeyValueOfstringint">

<xs:annotation>

<xs:appinfo>

<IsDictionary

xmlns="http://schemas.microsoft.com/2003/10/Serialization/">

true

</IsDictionary>

</xs:appinfo>

</xs:annotation>

<xs:sequence>

<xs:element minOccurs="0" maxOccurs="unbounded"

name="KeyValueOfstringint">

<xs:complexType>

<xs:sequence>

<xs:element name="Key" nillable="true" type="xs:string" />

<xs:element name="Value" type="xs:int" />

</xs:sequence>

</xs:complexType>

</xs:element>

</xs:sequence>

</xs:complexType>

<xs:element name="ArrayOfKeyValueOfstringint"

nillable="true" type="tns:ArrayOfKeyValueOfstringint" />

Note: You can find this schema under the types definition of the metadata endpoint. Usually requesting ?xsd=xsd2, instead of ?wsdl, will suffice.

As in:

<GetFooResponse>

<KeyValueOfstringint>

<Key>one</Key>

<Value>1</Value>

</KeyValueOfstringint>

<KeyValueOfstringint>

<Key>two</Key>

<Value>2</Value>

</KeyValueOfstringint>

<!-- ... -->

<KeyValueOfstringint>

<Key>N</Key>

<Value>N</Value>

</KeyValueOfstringint>

</GetFooResponse>
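
You can produce this kind of payload yourself by handing a Dictionary<string, int> directly to DataContractSerializer. This is only a sketch: DictionaryWireDemo is a made-up name, and the output is the bare serialized dictionary rather than a full SOAP response, so the outer element will differ from GetFooResponse.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;
using System.Text;

// Hypothetical helper that shows how a dictionary is written by the data contract serializer.
public static class DictionaryWireDemo
{
    public static string ToXml(Dictionary<string, int> values)
    {
        var serializer = new DataContractSerializer(typeof(Dictionary<string, int>));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, values);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }

    public static void Main()
    {
        var foo = new Dictionary<string, int> { { "one", 1 }, { "two", 2 } };

        // Each entry is written as its own KeyValueOfstringint element with Key and Value children.
        Console.WriteLine(ToXml(foo));
    }
}
```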

The part that carries the type information from service to client resides in the <xs:annotation> element, specifically in the /xs:annotation/xs:appinfo/IsDictionary element, which declares that this complex type represents a System.Collections.Generic.Dictionary class. Annotation elements in XML Schema are parser specific and do not convey any structure or data type semantics; they are there for the receiver to interpret.

This must be one of the best textbook cases of using XML Schema annotations. It allows a well-informed client (as in a .NET client, VS 2008 or svcutil.exe) to utilize the semantic meaning if it understands it. If not, no harm is done, since the best possible representation, in the form of key/value pairs, still goes through to the client.
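
As an illustration of how a receiver might look for that hint, the following sketch uses LINQ to XML to check whether a named complex type carries an IsDictionary appinfo element set to true. It is not how svcutil.exe is implemented; DictionaryHintDemo, IsDictionaryType and SampleSchema are hypothetical names, and the embedded schema is a trimmed copy of the one shown earlier.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical reader for the IsDictionary hint; for illustration only.
public static class DictionaryHintDemo
{
    private static readonly XNamespace Xs = "http://www.w3.org/2001/XMLSchema";
    private static readonly XNamespace Ser = "http://schemas.microsoft.com/2003/10/Serialization/";

    // Trimmed schema containing only the annotated complex type.
    public const string SampleSchema =
        @"<xs:schema xmlns:xs=""http://www.w3.org/2001/XMLSchema"">
  <xs:complexType name=""ArrayOfKeyValueOfstringint"">
    <xs:annotation>
      <xs:appinfo>
        <IsDictionary xmlns=""http://schemas.microsoft.com/2003/10/Serialization/"">
          true
        </IsDictionary>
      </xs:appinfo>
    </xs:annotation>
  </xs:complexType>
</xs:schema>";

    // Returns true when the named complex type has an IsDictionary appinfo hint whose value is "true".
    public static bool IsDictionaryType(XDocument schema, string typeName)
    {
        return schema.Descendants(Xs + "complexType")
            .Where(t => (string)t.Attribute("name") == typeName)
            .Elements(Xs + "annotation")
            .Elements(Xs + "appinfo")
            .Elements(Ser + "IsDictionary")
            .Any(e => e.Value.Trim() == "true");
    }

    public static void Main()
    {
        XDocument schema = XDocument.Parse(SampleSchema);
        Console.WriteLine(IsDictionaryType(schema, "ArrayOfKeyValueOfstringint"));
    }
}
```

A client that does not know the Serialization namespace simply never runs a check like this and treats the type as an ordinary sequence of pairs.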